How are computer graphics for film and animation created? How is a render farm organized, and how does it work? Why are AMD EPYC processors good at these tasks, and why is the answer to that question less obvious than it seems? We look at these questions using test servers that saw intensive use at CGF, a well-known Russian visual effects and animation studio.

If you immediately thought of AMD EPYC or Ryzen wins in CINEBENCH and decided the matter is settled, don't jump to conclusions: real-world CG effects and animation work is not that simple, and a large number of cores on its own solves nothing. With the support of AMD's Moscow office, the distributor ASBIS and the CGF studio, we have prepared an article on the finer points of the studio's work and its experience with trial operation of the new AMD EPYC 7002 series processors, which the studio tested to decide whether to use them in its server fleet.

The studio has hundreds of projects to its credit, from special effects for feature films to short commercials, as well as fully animated films. Yet even the so-called VFX breakdowns, which show viewers what was real and what was not, do not convey the depth and complexity of creating even a few exciting seconds of video, which in reality can take hours or even days. We cannot cover every subtlety and nuance, since the studio has its own secrets, but you can get a general idea of the processes.

Working process

It is hard to describe a typical scenario for the studio's special effects work on a film, since everything depends on the specific project. In general, the task is to process the original footage: add missing objects, remove unwanted ones, or correct those that were filmed. Source footage from a shoot can amount to dozens of terabytes of data and is usually stored on a set of LTO tapes. Tape capacity keeps growing, but so do video quality, resolution and color depth, so a single 1.5 TB LTO-5 cartridge holds only 5-10 minutes of uncompressed footage.
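The tape figures above imply a high raw data rate. Here is a quick back-of-the-envelope check in Python. It assumes decimal units (1 TB = 10^12 bytes) and a cartridge filled by a single uncompressed stream. The helper name is purely illustrative.

```python
# Rough check: what average data rate does "1.5 TB holds 5-10 minutes" imply?
# Assumption: decimal units (1 TB = 10**12 bytes, 1 GB = 10**9 bytes).
LTO5_CAPACITY_BYTES = 1.5e12  # native (uncompressed) LTO-5 capacity from the text


def implied_rate_gb_per_s(minutes: float, capacity_bytes: float = LTO5_CAPACITY_BYTES) -> float:
    """Average data rate in GB/s if one cartridge holds `minutes` of footage."""
    return capacity_bytes / (minutes * 60) / 1e9


for minutes in (5, 10):
    print(f"{minutes:>2} min per cartridge -> {implied_rate_gb_per_s(minutes):.1f} GB/s")
```

Under these assumptions, uncompressed footage of this quality runs at roughly 2.5-5 GB/s. That helps explain how a single shoot quickly reaches tens of terabytes.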

In the most complex case, everything starts with digitizing physical objects or modeling future "artificial" objects in the frame from scratch. This is followed by simulation, i.e. physical calculation of the behavior and interaction of those objects. Next comes rendering, the actual drawing of the modeled objects. At the very end, compositing combines the computed and rendered objects with the original footage. Behind this formally simple description, of course, lie dozens of hours of work by many specialists.

Initial modeling of objects is done on graphics workstations, mainly in Autodesk Maya. Simulation, physical calculations, rendering and so on are handled by SideFX Houdini. For compositing, the studio uses The Foundry Nuke. Each package has its own additional plug-ins and modules, and the studio also has its own in-house tools. Each stage is divided into separate tasks, which are computations largely independent of one another. Almost all of these tasks end up on the render farm.

"A problem for many small Hollywood studios is the limited budget for buying new server farms and workstations. For CGF, the hardware refresh cycle, even for fairly inexpensive equipment, can range from 4 to more than 7 years. For profitability, quality of work and speed of order delivery, the studio needs to use all of its existing equipment as efficiently as possible," says Kirill Kochetkov, CTO of CGF, who led the testing of the new platforms.

As a result, the CGF render farm is not fully unified: it consists of several types of once-expensive blades and server systems. When they are free, individual employees' graphics workstations are also connected as additional farm nodes. When choosing new hardware, it is important to match processors and platforms to specific loads and keep a balance. The tasks described above place noticeably different demands on resources: single- and multi-threaded performance, per-core IPC, memory bandwidth, and so on.

Many simulation tasks are very resource-intensive: computing a single frame can take several hours. Some scenarios use all processor resources (8-24 threads). Other specific tasks effectively use only one thread, and for them the frequency and IPC of a single core come to the fore. These include some physical calculations in which each next state depends directly on the previous one, for example, an object breaking apart into pieces that then interact with each other and with the environment, which they also affect. Rendering itself, on average, parallelizes quite well.

Most tasks are computed on CPUs. In some cases GPUs can provide acceleration, but this is not a universal solution. When a particular project is well funded, some studios build systems with accelerators for specific software. In CGF's case, adding GPUs to the studio's existing farm no longer makes much sense because of the aging server hardware and the accumulated toolset.

The second important resource is RAM. Its consumption depends on the specific task (and even the specific frame), and when it runs short, the overall workload can become unbalanced and slow down. In particular, a task may consume almost all of a node's RAM while loading only a small fraction of the available cores. And when the node runs out of free RAM and has to swap to disk, computation time increases many times over.

Finally, do not forget the disk subsystem. Its requirements also depend on the task. Some tasks need a large amount of input data but produce very little output. For others, usually physics simulations, it is exactly the opposite. For still others, the intermediate results take up a huge amount of space while the input and output are relatively small. Another nuance is that common caching technologies are ineffective for most tasks, since files are usually read only once. Some algorithmic computations can be run very efficiently in the cloud or on a remote cluster, since there is no need to move dozens of terabytes of data across drives and between nodes and storage.

To simplify task distribution, each task is assigned a weight that reflects its resource requirements, and each farm node, correspondingly, has a certain capacity. For example, a node with a capacity of 1000 conventional units (c.u.) can process ten tasks of 100 c.u. each at the same time. Alternatively, it can run two tasks of 350 c.u. plus two tasks of 150 c.u. A 2000 c.u. task, however, exceeds that node's capacity, so it will never be sent there.

"Keeping available and required resources in balance lets CGF use its hardware capacity efficiently. If the load is not distributed across the whole farm, that means direct financial losses from idle or underused equipment. Ideally, the entire farm should always be 100% loaded, and in practice this is what CGF strives for," says the studio's CTO.

Render Farm

Building the CGF farm on blade systems (4U chassis, 10 "blades" each) made it possible to pack computing resources densely into the studio's available floor space. Currently there are two kinds of such nodes, based on previous generations of Intel Xeon:

A dual-processor "blade" in two variants (hereinafter Blades 1 and Blades 2) with the same configuration: 2 × Intel Xeon E5645 (6C/12T, 2.4-2.67 GHz, 80 W), 64 GB RAM;

A more powerful "blade", also dual-processor (Blades 3): 2 × Intel Xeon E5-2670 (8C/16T, 2.6-3.3 GHz, 115 W), 128 GB RAM.

But that's not all: every workstation in the studio is also used when employees are not working on it interactively. A typical configuration includes a high-frequency 8- or 12-thread processor (Intel Core i7-6700K, i7-8700K or i7-9700K) and 64 GB of RAM. At peak, the number of active nodes reaches 150.

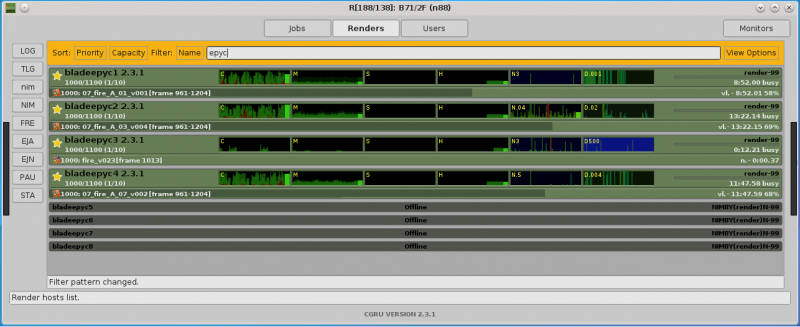

The farm runs simulation and rendering tasks, model calculations, and assembly tasks for the final image. The render farm software runs on Debian 10 Linux. Tasks are distributed by CGRU, a popular open-source render farm management package. Because there are many tasks and they are largely independent of each other, the farm can accept almost any suitable hardware, and there is always something to keep it busy.

The load on the test server with virtualization and four VMs

That is why test servers based on current AMD EPYC and Intel Xeon Scalable processors were added to this render farm to evaluate their prospects. The first question was how the studio's tasks would perform on a top-end single-socket AMD server with the maximum number of cores. The second was how they would perform on two dual-socket systems, one with AMD and one with Intel processors, with roughly the same total number of cores per machine.

Unfortunately, at the time of testing it was not possible to obtain the most closely matched configurations. These would have used the new Intel Xeon Gold 6248R, which has more cores and a higher frequency at a lower price (1ku RCP $2,700), and the new AMD EPYC 7F72 (1ku RCP $2,450), which has the same number of cores as the tested EPYC 7402 but a higher frequency. As a result, configurations with slightly simpler processors were used, which in terms of CPU cost (see below) seems an acceptable option for testing.

Single-processor server based on AMD EPYC 7702 (hereinafter referred to as EPYC x1):

Gigabyte R162-Z11-00 (1U) platform;

1 × AMD EPYC 7702 (64C/128T, 2.0-3.35 GHz, 180W);

512 GB (8 × 64 GB) DDR4-3200;

Seagate FireCuda 520 SSD (M.2, NVMe, 500 GB);

10GbE network controller;

2 × 1200 W PSU (80+ Platinum).

Dual-processor server based on AMD EPYC 7402 (hereinafter referred to as EPYC x2):

Gigabyte R282-Z91-00 (2U) platform;

2 × AMD EPYC 7402 (24C/48T, 2.8-3.35 GHz, 180W);

1 TB (16 × 64 GB) DDR4-3200;

Seagate FireCuda 520 SSD (M.2, NVMe, 500 GB);

10GbE network controller;

2 × 1600 W PSU (80+ Platinum).

Dual-processor server based on Intel Xeon Gold 6248 (hereinafter referred to as Xeon x2):

Gigabyte R281-3C2 (2U) platform;

2 × Intel Xeon Gold 6248 (20C/40T, 2.5-3.9 GHz, 150W);

768 GB (12 × 64 GB) DDR4-2933;

2 × Samsung PM883 SSD (SATA, 240GB; LSI RAID);

10GbE network controller;

2 × 1200 W PSU (80+ Platinum).

All machines ran with the performance power profile, and the AMD systems kept the default of one NUMA domain per socket. All machines had the same software stack as the rest of the farm, with no issues. Only the software itself resided on local disks. Working data sat on external NFS storage accessed over a 10GbE network. All machines used a 1DPC (one DIMM per channel) memory configuration at the highest frequency each platform supports. For the studio's current farm workloads this amount of memory is usually more than needed, but it is certain not to limit performance in the tests.

Testing

The same test scenarios were run on one machine of each type (EPYC x1, EPYC x2, Xeon x2, Blades 1, Blades 2 and Blades 3). They included both purely synthetic loads, which in practice are only parts of larger tasks, and real computations from projects the studio was working on during the several-week test period.

Synthetic tests

Physical simulations of different types of objects and substances in SideFX Houdini were used for an initial assessment of node performance:

Cloth - fabric and clothing;

FLIP - fluid;

Grain - bulk solids;

Pyro - gas;

RBD - dynamics of solid bodies;

Vellum Cloth and Vellum Hair - dynamics of soft bodies, based on explicit links between points.

These computations are relatively fast and place different demands on core count and frequency. For example, the Cloth solver is single-threaded, so it is the only one for which maximum single-core frequency is what matters. The other tools can parallelize computations to a greater or lesser extent. In this test, each node ran exactly one computation task.

The graph above shows how long each node took to compute each of the simulation types listed above. The large gap between old and new nodes is largely explained by the architectural improvements in processors and platforms over the past decade. If we compare only the modern platforms, taking the Xeon machine's results as the baseline, the difference is no longer as dramatic, although still very significant.

On average, the single-processor AMD EPYC system is about 20% faster, and the dual-processor AMD EPYC system about 30% faster. The AMD machines lead in every test except the first one, which, as noted above, differs in that its math runs single-threaded. Recall that the Intel Xeon Gold 6248 has a turbo frequency of 3.9 GHz, while the AMD processors top out at 3.35 GHz. This probably explains the roughly 10% gap in that test.

Practical Tests: Simulation and Render

The test systems ran as part of the studio's render farm for several weeks, which made it possible to collect fairly extensive statistics on the several hundred tasks processed during that time. Since tasks were generally distributed across compute nodes automatically, the most representative ones were selected for the final report. These were tasks with a large number of frames and a per-frame computation time of at least one minute on the fastest machine.

In the vast majority of cases, the EPYC-based servers were faster than the Xeon machine, and the single-socket EPYC system was on average even slightly faster than the dual-socket one. On average, the AMD systems were 20-21% faster than the Intel server. But since these are real tasks that combine different types of load, the gain is not uniform. In one case the two AMD processors were three times faster than the two Intel CPUs, and in another almost half as fast. This disparity is a direct consequence of the fact that individual tasks do not always scale efficiently to a large number of cores.
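One standard way to reason about such uneven scaling is Amdahl's law. It is offered here as an illustration, not as anything the studio measured. If a fraction p of a task's work parallelizes perfectly, the ideal speedup on n cores is 1 / ((1 - p) + p / n). The parallel fractions below are hypothetical, and only the core counts match the test machines (40, 48 and 64 cores).

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only `parallel_fraction` of the work scales."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical parallel fractions; core counts of Xeon x2, EPYC x2 and EPYC x1.
for p in (0.50, 0.90, 0.99):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):5.1f}x" for n in (40, 48, 64))
    print(f"p = {p:.2f} -> {row}")
```

Even at p = 0.9, going from 40 to 64 cores barely moves the ideal speedup (about 8.2x versus 8.8x). So on such a task, a machine with fewer but faster cores can come out ahead.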

In such cases it may be more advantageous to run "several tasks per node" rather than "one task per node", as in the previous tests. To evaluate both approaches, a set of 10 frames to render was sent to each test system. In the sequential variant, the frames were sent to the server one by one; in the parallel variant, all at once. The metric is total computation time. For this task, the dual-processor AMD and Intel servers were about a third faster when frames were launched simultaneously, and the single-processor AMD server about 20% faster. In terms of overall speed, the AMD systems lead.

In the next dedicated test, to study parallelization efficiency more precisely, we used a different rendering task of eight frames. The computation time of each frame on the studio farm nodes (Blades) was typically 10-20 minutes, depending on their performance. The task was run on the single-processor AMD server (EPYC x1), with the task manager set to compute one, two, four or eight frames simultaneously. The first variant is thus sequential, and all the others are parallel.

Here it is worth looking at how the rendering software works. One common approach to automatically parallelizing its work is to split the target image into several blocks and render each block in a separate thread. In general, the user can choose how many blocks a task can or should be split into. The renderer for each frame does not know that anything else is running on the node. It therefore sets the number of threads it launches for block rendering based on the number of compute cores.
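Below is a minimal sketch of the block-splitting idea. It assumes square blocks and one worker thread per logical CPU as reported by `os.cpu_count()`. The functions are hypothetical stand-ins, not any renderer's API. Python threads only illustrate the structure here, since a real renderer does its shading in native threads. If eight such frames start on the same node, each one sizes its pool independently, which is how the thread oversubscription discussed in the text arises.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def split_into_buckets(width: int, height: int, bucket: int) -> list[tuple[int, int, int, int]]:
    """Split the image into (x0, y0, x1, y1) blocks of at most bucket x bucket pixels."""
    return [
        (x, y, min(x + bucket, width), min(y + bucket, height))
        for y in range(0, height, bucket)
        for x in range(0, width, bucket)
    ]


def render_bucket(box: tuple[int, int, int, int]) -> int:
    """Placeholder for real shading work; returns the number of pixels 'rendered'."""
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0)


def render_frame(width: int, height: int, bucket: int = 64) -> int:
    # Size the pool by the node's logical CPU count only, unaware of other
    # frames that may be running on the same node at the same time.
    workers = os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(render_bucket, split_into_buckets(width, height, bucket)))


print(render_frame(1920, 1080))
```

The printed value equals 1920 × 1080, confirming that the blocks cover every pixel exactly once.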

As a result, computing eight frames simultaneously already relies heavily on the processor and operating system mechanisms for handling far more threads than there are cores. The results table shows that this yields a good gain. The total time to result is about a third less than when all frames are computed sequentially, even though formally both variants keep all processor cores busy.

Virtualization

As mentioned above, for some computing scenarios, one powerful server with many cores and a large amount of RAM may not be very efficient, because heterogeneous tasks are hard to manage on it. In particular, this stems from differences between resources such as cores and RAM. The former only affect computation speed, whereas the memory consumption of heterogeneous tasks may simply be impossible to predict and manage.

In this case you can consider "slicing" one "big" server into several virtual ones with a fixed allocation of resources. This approach also lets you adjust resource allocation on the fly, choosing the best option for the current tasks. To test it, the open-source Proxmox virtualization platform was installed on the single-processor AMD server (EPYC x1).

The EPYC x1 machine has 64 cores/128 threads and 512 GB of RAM. On it, virtual machine (VM) configurations ranged from eight VMs with 16 cores and 64,000 MB of RAM each to a single VM with 128 cores and 512,000 MB of RAM; here "cores" means virtual cores, one per hardware thread. Unlike the previous tests on bare-metal servers, this scenario may have some performance limitations, because the VM disks were placed on external storage connected via NFS over a 10 Gbit/s network link.
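The VM layouts in this test are simply equal slices of the host. Below is a small helper that computes such slices, assuming an even split of virtual cores and RAM. It only does the arithmetic and does not call Proxmox; the VMs themselves would be created through Proxmox's own web UI or CLI. The function name is hypothetical.

```python
def slice_server(vcpus: int, ram_mb: int, vm_count: int) -> list[tuple[int, int]]:
    """Split a host into `vm_count` equal VMs, returned as (vCPUs, RAM in MB) pairs."""
    if vcpus % vm_count or ram_mb % vm_count:
        raise ValueError("resources must divide evenly between the VMs")
    return [(vcpus // vm_count, ram_mb // vm_count)] * vm_count


# EPYC x1 as used in the test: 128 virtual cores and 512,000 MB for the guests.
for n in (8, 4, 2, 1):
    cores, ram = slice_server(128, 512_000, n)[0]
    print(f"{n} VM(s): {cores} cores + {ram} MB each")
```

The four layouts it prints match the four rows of the table that follows.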

Total computation time

| VM configuration on EPYC x1 | 1 frame | 2 frames | 4 frames | 8 frames |
|---|---|---|---|---|
| 8 VM: 16 cores + 64000 MB | 00:21:14 | - | - | - |
| 4 VM: 32 cores + 128000 MB | 00:23:09 | 00:22:03 | - | - |
| 2 VM: 64 cores + 256000 MB | 00:26:53 | 00:24:45 | 00:23:30 | - |
| 1 VM: 128 cores + 512000 MB | 00:38:30 | 00:31:01 | 00:27:28 | 00:25:27 |
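Converting the table's times to seconds makes the spread easier to see. The sketch below reads the column headers as the number of frames computed at once in each VM; that reading is our interpretation. It compares the best and worst cells, and also sequential versus eight-at-once rendering inside the single large VM.

```python
def to_seconds(hms: str) -> int:
    """Convert an 'HH:MM:SS' string to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


# (VM layout, frames at once per VM) -> total time, copied from the table above.
results = {
    ("8 VM", 1): "00:21:14",
    ("4 VM", 1): "00:23:09", ("4 VM", 2): "00:22:03",
    ("2 VM", 1): "00:26:53", ("2 VM", 2): "00:24:45", ("2 VM", 4): "00:23:30",
    ("1 VM", 1): "00:38:30", ("1 VM", 2): "00:31:01",
    ("1 VM", 4): "00:27:28", ("1 VM", 8): "00:25:27",
}

best = min(results, key=lambda cell: to_seconds(results[cell]))
worst = max(results, key=lambda cell: to_seconds(results[cell]))
spread = to_seconds(results[worst]) / to_seconds(results[best])
saving_1vm = 1 - to_seconds(results[("1 VM", 8)]) / to_seconds(results[("1 VM", 1)])

print(f"best {best}, worst {worst}, worst/best = {spread:.2f}")
print(f"1 VM, 8 frames at once vs one at a time: {saving_1vm:.0%} less time")
```

Within the single 128-core VM, running eight frames at once saves roughly a third of the time. The gap between the best and worst layouts is about 1.8x.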

First of all, the best result in terms of speed differs very little from the best result obtained on bare metal without virtualization. This suggests that multi-core AMD EPYC processors are well suited to such scenarios and can handle heavy loads. Under virtualization, the best scheme is "8 virtual machines, each computing its own frame". In general, AMD EPYC systems are most effective in terms of speed precisely in "overloaded" scenarios, where many resource-intensive tasks or threads run simultaneously.

Power consumption and density

Power consumption and energy efficiency are just as important as performance when evaluating systems. The old blade systems have their own specifics. A 4U chassis with ten blades draws about 3 kW (about 260-300 W per node) and needs a separate cable for each of its four power supplies. The test systems are far less demanding: they need only two cables per 1U or 2U node. Density and power consumption of the modern systems and the old blade servers are compared per 4U of rack space, since that is how much space each of the studio's blade systems occupies.

During testing, all three servers were set up for remote power monitoring using sensors built into the platform. The graph above shows maximum and minimum power consumption. The former are the highest stable values recorded under load; the latter were measured with the servers idle. The higher efficiency of the AMD systems is probably due in part to their finer manufacturing process.

In terms of resource density, the single-processor 1U system with AMD EPYC 7702 is the most interesting. Four such systems provide 256 cores with a total draw of about 1.3 kW. By comparison, the old blade system (Blades 3) in a 4U chassis has 160 cores and, as mentioned above, draws about 3 kW. In other words, the modern AMD solution offers 1.6 times more cores while drawing less than half the power.

Two dual-processor 2U systems with AMD EPYC 7402 are somewhat less dense: 96 cores at 950 W. That is 1.66 times fewer cores and more than three times lower power consumption than the 4U blade chassis (Blades 3). Finally, for the Intel Xeon Gold 6248 test system under the same comparison conditions, we get half as many cores and a little less than three times lower power consumption.
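The ratios above follow directly from the quoted core counts and power figures. Below is a quick recomputation using only numbers from the text. The Xeon system is left out because its power draw is not given numerically.

```python
# Blades 3 reference: one 4U chassis, 10 blades x 2 sockets x 8 cores, ~3 kW.
BLADES3_CORES, BLADES3_WATTS = 10 * 2 * 8, 3000


def versus_blades3(cores: int, watts: float) -> tuple[float, float]:
    """Return (core-count ratio, power reduction factor) relative to Blades 3."""
    return cores / BLADES3_CORES, BLADES3_WATTS / watts


# 4U of rack space filled with each test platform, figures from the text.
per_4u = {
    "4 x EPYC x1 (1U)": (4 * 64, 1300),
    "2 x EPYC x2 (2U)": (2 * 2 * 24, 950),
}

for name, (cores, watts) in per_4u.items():
    core_ratio, power_factor = versus_blades3(cores, watts)
    print(f"{name}: {cores} cores, {core_ratio:.2f}x the cores, "
          f"{power_factor:.2f}x less power, {cores / watts * 1000:.0f} cores/kW")
```

Cores per kilowatt is a compact way to fold both effects into one number. Blades 3 comes out at about 53 cores/kW, versus roughly 197 for four EPYC x1 nodes.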

In addition, the 1U/2U test servers are less demanding in terms of cooling than the blades. They can also be fitted with GPUs (if the platform supports it), which can speed up some computations or be used for VDI. If such versatility is not needed and even higher density is required, 2U4N nodes can be used.

Cost

It should be said right away that the cost figures for the test platforms are approximate. Such purchases are usually far more complex and take the whole project into account rather than individual machines. ASBIS, which supplied the servers for testing, also provided prices for each base platform (CPU + memory + chassis) for a single-unit purchase. Naturally, prices will differ when more machines are bought.

Estimated cost of configurations, $

| | EPYC x1 | EPYC x2 | Xeon x2 |
|---|---|---|---|
| Processors | 7 435 | 2 × 2 185 | 2 × 3 050 |
| Chassis | 2 100 | 3 070 | 2 350 |
| Memory | 8 × 320 | 16 × 320 | 12 × 299 |
| Total | 12 095 | 12 560 | 12 038 |
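The totals in the table can be reproduced from the component prices. Dividing by core count (64, 48 and 40 cores respectively) also gives a rough platform cost per core. This is a simple recomputation of the listed figures, not an ASBIS price model.

```python
# Component prices in USD from the table above; core counts per machine.
configs = {
    "EPYC x1": {"cpu": 1 * 7435, "chassis": 2100, "memory": 8 * 320, "cores": 64},
    "EPYC x2": {"cpu": 2 * 2185, "chassis": 3070, "memory": 16 * 320, "cores": 48},
    "Xeon x2": {"cpu": 2 * 3050, "chassis": 2350, "memory": 12 * 299, "cores": 40},
}

totals = {name: c["cpu"] + c["chassis"] + c["memory"] for name, c in configs.items()}

for name, total in totals.items():
    per_core = total / configs[name]["cores"]
    print(f"{name}: ${total:,} total, ~${per_core:.0f} per core")
```

With nearly equal totals, the per-core figure is driven mostly by core count, which favors the 64-core single-socket machine.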

All three platforms cost about the same. In the real-load tests, both AMD systems proved almost identical in performance and noticeably faster than the Xeon server. However, given its higher density and energy efficiency, the single-socket AMD system is the most cost-effective.

If we compare the systems actually tested with theoretically more suitable ones (Xeon Gold 6248R, EPYC 7702P and 7F72), the picture becomes even more interesting. AMD's pricing for P-series processors, which work only in single-socket systems, aims to displace Intel's two-socket configurations: for a lower CPU cost you can get higher density and/or more cores. This applies even more to "simpler" models without the boosted frequencies and huge cache of the 7Fx2 series.

Processors, 1ku RCP, $

| | EPYC x1 | EPYC x2 | Xeon x2 |
|---|---|---|---|
| Tested | 6 450 | 2 × 1 783 | 2 × 3 075 |
| Optimal | 4 425 (7702P) | 2 × 2 450 (7F72) | 2 × 2 700 (6248R) |
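Normalizing these CPU prices by core count shows the effect AMD's P-series pricing is aiming for. Core counts for the tested chips come from the configurations above. For the 7F72, the text says it matches the 7402 (24 cores), and 24 cores for the Xeon Gold 6248R is its published specification. The helper below is illustrative only.

```python
# (sockets, 1ku RCP per CPU in USD, cores per CPU)
cpus = {
    "Tested": {"EPYC x1": (1, 6450, 64), "EPYC x2": (2, 1783, 24), "Xeon x2": (2, 3075, 20)},
    "Optimal": {"EPYC x1": (1, 4425, 64), "EPYC x2": (2, 2450, 24), "Xeon x2": (2, 2700, 24)},
}


def cpu_cost(sockets: int, price: int, cores: int) -> tuple[int, float]:
    """Return (total CPU cost, CPU cost per core) for one machine."""
    total = sockets * price
    return total, total / (sockets * cores)


for option, machines in cpus.items():
    for name, spec in machines.items():
        total, per_core = cpu_cost(*spec)
        print(f"{option:<7} {name}: ${total:,} for CPUs, ${per_core:.0f} per core")
```

With the "optimal" parts, the single-socket 7702P is the cheapest per core by a wide margin (about $69 versus $102 and $112).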

At the same time, AMD deliberately does not segment its processors by other parameters. Every EPYC 7002 has 8 DDR4-3200 memory channels and 128 PCIe 4.0 lanes, versus 6 DDR4-2666/2933 channels and 48 PCIe 3.0 lanes on Intel. Dual-processor AMD systems may still be needed in several cases: to get a higher base frequency for a given number of cores, when more than 4 TB of memory is required, to meet specific requirements for memory capacity and bandwidth per core, and for HPC/AI systems.

Conclusion

One of the main conclusions the studio drew from testing concerns not hardware but software: it is fully compatible with AMD platforms. All software packages used by CGF worked without problems or additional tuning, including in the more complex block-based and virtualized rendering scenarios. Fear of software incompatibility is still one of the psychological, and not always justified, barriers when choosing hardware. Installing additional network adapters or drives caused no compatibility issues either.

On the hardware side, AMD's strategy of promoting single-socket systems hit the mark in the studio's case. The test platforms are in the same price range and much faster than the old blade systems, although the two-socket AMD system is still somewhat more expensive than the others. Meanwhile, in the studio's real simulation and rendering tasks, both AMD platforms are on average 20-21% faster than the Xeon-based system. Using the P version of the processor in a single-socket AMD server would cut its cost further. Given that, this platform clearly offers the best price/performance ratio of all those tested.

It is also more energy efficient and denser in terms of core count than the other test platforms and the old blade systems. Simpler cabling and lower electricity bills are undoubtedly important for the studio. Taken together, these factors led the studio to buy another single-socket AMD EPYC 7002 server even before all the render farm tests were finished, to explore its capabilities in other IT scenarios at the company.

Based on the test results, the studio is considering a partial upgrade of the render farm using single-processor 1U AMD platforms, though in a configuration different from the test system. It does not plan to abandon the blade systems completely. Finances remain the main factor, since the studio's economics are closely tied to the requirements of the orders it takes on: the more numerous and technically complex the projects, the higher the demands on hardware.

In the current situation, the studio needs a small, relatively inexpensive, but sufficiently fast and versatile cluster for everyday needs, including the development of new techniques and capabilities. Individual super-heavy project tasks, for a combination of reasons, are usually more economical to offload to external sites: large clusters or clouds.

"How many cores should we go for? The best fit for us right now is 1U systems with 32-core AMD EPYC 7502P processors (2.5-3.35 GHz, 128 MB L3 cache, 180 W TDP). They strike a balance between cost, including chassis and memory, density, power consumption, performance and versatility, which keeps the studio running efficiently in the current environment," concludes CGF's CTO.
